GeoWerkstatt Project of the Month, April 2023

Project: Improving a 3D person detection algorithm for HoloLens using human pose

Researchers: Vinu Kamalasanan, Yu Feng, Monika Sester

Project idea: Improving the detection of moving people so that AR devices can better influence how their wearers walk.

A cyclist rushes past from the left, a woman with a dog approaches from the right, and I am trying to cross the road safely between parked cars. Road traffic demands full attention, especially where many different road users share the space.

Technology could help reduce dangerous situations and collisions. In this project, we aim to show that AR headsets such as the HoloLens, with their 3D visualization, can identify potentially dangerous situations and motivate people to change how they walk to avoid collisions.

Our project is part of the DFG-funded Research Training Group SocialCars, which addresses the challenges that arise from automated mixed-traffic scenarios and novel mobility services for dynamic traffic management.

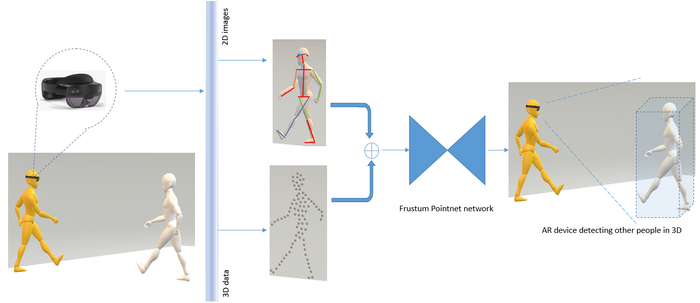

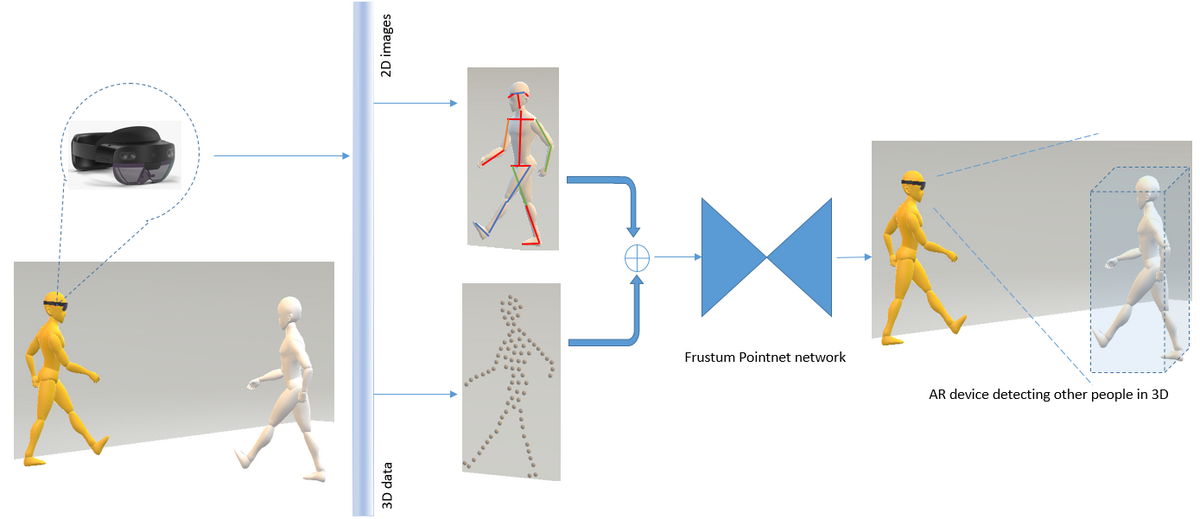

Our project focuses on detecting the road users around us with the HoloLens and on improving that detection. To do so, we use the HoloLens cameras and 3D sensors to capture the movements of surrounding pedestrians.

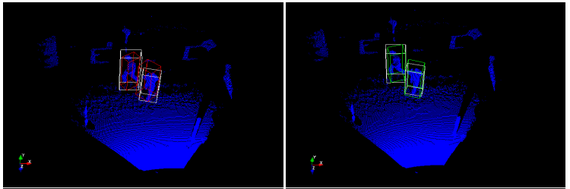

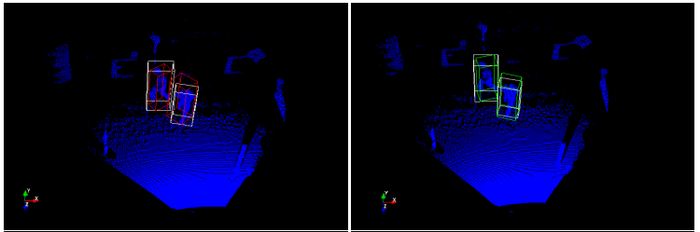

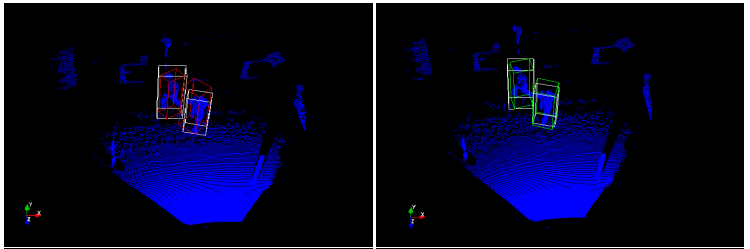

Neural network algorithms proven in autonomous driving and robotics help us find and identify people in the sensor data. The deep learning method Frustum Pointnet uses data from both the image and the 3D depth sensors to detect people and estimate their 3D positions.
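
In the published Frustum PointNets design, a 2D detector first finds the object in the camera image, and only the 3D points whose projection falls inside that 2D box (the viewing frustum) are passed on to the point-cloud network. The plain-Python sketch below illustrates just this frustum-selection step. It assumes points already expressed in the camera frame and an ideal pinhole camera with hypothetical intrinsics `fx`, `fy`, `cx`, `cy`; it is not the HoloLens sensor pipeline or the project's code.

```python
from typing import List, Tuple

Point3D = Tuple[float, float, float]          # camera frame: x right, y down, z forward (metres)
Box2D = Tuple[float, float, float, float]     # (u_min, v_min, u_max, v_max) in pixels


def project(point: Point3D, fx: float, fy: float, cx: float, cy: float) -> Tuple[float, float]:
    """Ideal pinhole projection of a camera-frame point onto the image plane."""
    x, y, z = point
    return fx * x / z + cx, fy * y / z + cy


def frustum_points(points: List[Point3D], box: Box2D,
                   fx: float, fy: float, cx: float, cy: float) -> List[Point3D]:
    """Keep the points in front of the camera whose projection lies inside the 2D box."""
    u_min, v_min, u_max, v_max = box
    selected = []
    for p in points:
        if p[2] <= 0:  # behind the camera: the point cannot appear in the image
            continue
        u, v = project(p, fx, fy, cx, cy)
        if u_min <= u <= u_max and v_min <= v <= v_max:
            selected.append(p)
    return selected


# Usage: a hypothetical 2D person box around the image centre and a tiny point cloud.
cloud = [(0.0, 0.0, 2.0), (0.1, -0.2, 3.0), (1.5, 0.0, 2.0), (0.0, 0.0, -1.0)]
person = frustum_points(cloud, (280, 200, 360, 280), fx=500, fy=500, cx=320, cy=240)
print(person)  # [(0.0, 0.0, 2.0), (0.1, -0.2, 3.0)]
```

Only the selected points would then go to the 3D segmentation and box-estimation stages, which keeps the point-cloud network focused on one candidate person at a time.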

We extended the published Frustum Pointnet to also use high-level image features of the detected person, to see whether this improves the accuracy of locating people.

We investigate this idea by extracting human pose information from the camera images and adding selected pose features to our detection network. Because we only want to use high-level features, we take them from the high-performing OpenPose detector.

Given a camera image, OpenPose identifies a person's body parts (arms, legs, shoulders, etc.) and returns their positions as 2D points. Because the lengths between a person's joints appear different in the image when the person stands facing forward than when they turn left or right, these lengths can carry valuable information for locating different people at various points in time in a 3D scene.
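
The intuition that joint lengths change with body orientation can be made concrete with a simple weak-perspective approximation. This is our illustrative assumption, not a model used in the project: a shoulder segment of true width W at depth Z, rotated by a yaw angle θ away from facing the camera, projects to roughly f·W·|cos θ|/Z pixels. The focal length and shoulder width below are hypothetical values.

```python
import math


def projected_width(true_width_m: float, depth_m: float, yaw_rad: float, focal_px: float) -> float:
    """Approximate image width (pixels) of a horizontal body segment such as the shoulders.

    Weak-perspective assumption: both endpoints are treated as lying at the same
    depth, so rotating the segment by yaw_rad about the vertical axis only
    shortens its projection by |cos(yaw)|.
    """
    return focal_px * true_width_m * abs(math.cos(yaw_rad)) / depth_m


# Hypothetical values: 0.4 m shoulder width, person 3 m away, 500 px focal length.
for yaw_deg in (0, 45, 90):
    w = projected_width(0.4, 3.0, math.radians(yaw_deg), 500.0)
    print(f"yaw {yaw_deg:2d} deg -> shoulder width ~ {w:.1f} px")
```

The same segment shrinks from its full width when facing the camera to almost nothing in profile, which is the kind of orientation cue the pose features are meant to provide.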

Among all joint features, we found the shoulder and hip lengths to be the most useful for improving person detection. We also tried including all body parts, but this did not improve the results further. Our approach therefore adds abstract representations of the shoulder and hip to the published Frustum Pointnet (see Figure 1).
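
Shoulder and hip lengths can be read directly from OpenPose's 2D keypoints. The sketch below assumes OpenPose's BODY_25 model, whose JSON output stores each person's `pose_keypoints_2d` as a flat list of (x, y, confidence) triplets. The function names, the confidence threshold, and the plain concatenation with a point-cloud feature vector are hypothetical illustrations, since the exact "abstract representation" and the point where it enters the network are not described here.

```python
import math
from typing import List, Optional, Tuple

# BODY_25 keypoint indices in OpenPose: right/left shoulder and right/left hip.
R_SHOULDER, L_SHOULDER, R_HIP, L_HIP = 2, 5, 9, 12


def keypoint(flat: List[float], idx: int, min_conf: float) -> Optional[Tuple[float, float]]:
    """Return (x, y) of keypoint idx, or None if its confidence is below min_conf."""
    x, y, c = flat[3 * idx: 3 * idx + 3]
    return (x, y) if c >= min_conf else None


def segment_length(flat: List[float], a: int, b: int, min_conf: float) -> Optional[float]:
    """Pixel distance between two keypoints, or None if either one is missing."""
    pa, pb = keypoint(flat, a, min_conf), keypoint(flat, b, min_conf)
    if pa is None or pb is None:
        return None
    return math.dist(pa, pb)


def pose_features(flat: List[float], min_conf: float = 0.1) -> List[float]:
    """Hypothetical pose feature: [shoulder length, hip length] in pixels, 0.0 if missing."""
    shoulder = segment_length(flat, R_SHOULDER, L_SHOULDER, min_conf)
    hip = segment_length(flat, R_HIP, L_HIP, min_conf)
    return [shoulder or 0.0, hip or 0.0]


def fuse(point_feature: List[float], pose_feature: List[float]) -> List[float]:
    """Hypothetical fusion: append the pose features to a per-object point-cloud feature."""
    return point_feature + pose_feature


# Usage with a synthetic OpenPose-style record (25 keypoints, all zero except four).
flat = [0.0] * (25 * 3)
flat[3 * R_SHOULDER: 3 * R_SHOULDER + 3] = [300.0, 200.0, 0.9]
flat[3 * L_SHOULDER: 3 * L_SHOULDER + 3] = [360.0, 200.0, 0.9]
flat[3 * R_HIP: 3 * R_HIP + 3] = [310.0, 320.0, 0.8]
flat[3 * L_HIP: 3 * L_HIP + 3] = [350.0, 320.0, 0.8]
record = {"people": [{"pose_keypoints_2d": flat}]}
feat = pose_features(record["people"][0]["pose_keypoints_2d"])
print(feat)                     # [60.0, 40.0]
print(fuse([0.5, -1.2], feat))  # [0.5, -1.2, 60.0, 40.0]
```

In practice such pixel lengths would likely need normalizing (for example by distance or image size) before being fed to a network; that choice is left open here.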

For training, we recorded a motion dataset (855 frames in total) of pedestrians walking in front of the HoloLens sensors. The pedestrians' positions were added as 3D ground-truth box labels using semi-automated labelling. We trained our improved network on this dataset and, to quantify the improvement, also trained the published Frustum Pointnet on the same data and compared the two.

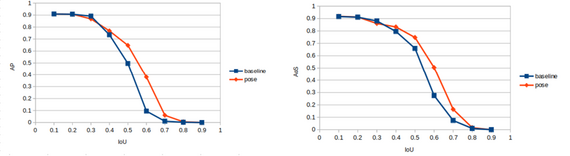

Intersection over Union (IoU) is a common metric for comparing object detectors. It is the ratio of the overlap (intersection) between a predicted box and its ground-truth box to the union of the two. A higher IoU therefore indicates more accurate localization of people in the sensor data.
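
For 3D boxes, IoU is the intersection volume divided by the union volume. The minimal sketch below assumes axis-aligned boxes given as centre and size. Detection benchmarks usually compute IoU for boxes rotated by their heading angle, which requires a polygon intersection in the ground plane, so this is a simplification for illustration only.

```python
from typing import Tuple

Box3D = Tuple[float, float, float, float, float, float]  # (cx, cy, cz, dx, dy, dz)


def overlap_1d(c1: float, d1: float, c2: float, d2: float) -> float:
    """Length of the overlap of two intervals given by centre and size (0 if disjoint)."""
    lo = max(c1 - d1 / 2, c2 - d2 / 2)
    hi = min(c1 + d1 / 2, c2 + d2 / 2)
    return max(0.0, hi - lo)


def iou_3d_axis_aligned(a: Box3D, b: Box3D) -> float:
    """Intersection volume over union volume of two axis-aligned 3D boxes."""
    inter = 1.0
    for i in range(3):  # multiply the overlaps along x, y and z
        inter *= overlap_1d(a[i], a[i + 3], b[i], b[i + 3])
    vol_a = a[3] * a[4] * a[5]
    vol_b = b[3] * b[4] * b[5]
    union = vol_a + vol_b - inter
    return inter / union if union > 0 else 0.0


# Usage: a predicted pedestrian box shifted 10 cm sideways from the ground truth.
gt = (0.0, 0.0, 3.0, 0.6, 1.8, 0.6)
pred = (0.1, 0.0, 3.0, 0.6, 1.8, 0.6)
print(round(iou_3d_axis_aligned(gt, pred), 3))  # 0.714
```

Even a 10 cm offset on a 60 cm wide box drops the IoU to about 0.71, which shows why high IoU thresholds are a strict test of localization.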

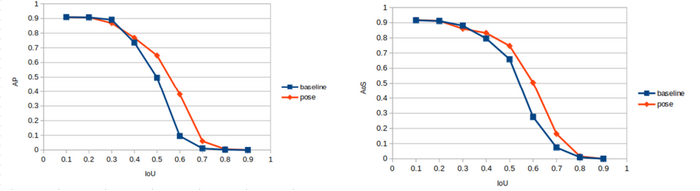

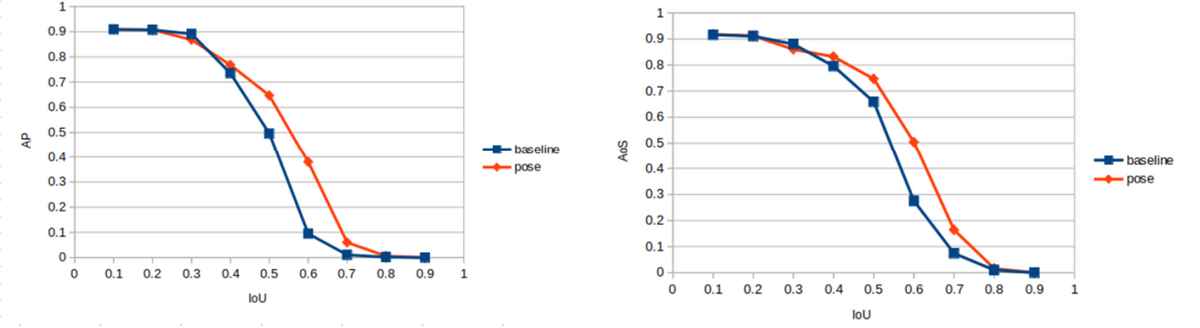

The figure compares two metrics, average precision (AP) and average orientation similarity (AoS), for the published network (baseline) and our improved network (pose). AP summarizes the precision of the detections across all recall levels, so higher values mean better detection of people. AoS additionally weights each correct detection by how closely its estimated heading matches the ground truth, so it is higher when the network identifies the walking direction correctly.
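
To make the two metrics concrete, the sketch below follows the AOS definition introduced with the KITTI benchmark. Detections are ranked by confidence; along the recall curve it tracks precision and an orientation similarity s(r) = (1/|D(r)|) Σ (1 + cos Δθ)/2 over the true positives among the detections D(r); both curves are then averaged with interpolation at evenly spaced recall levels. It assumes the detections have already been matched to ground truth at a chosen IoU threshold; the input format `(score, is_true_positive, delta_theta)` and the default of 11 recall points are assumptions for illustration.

```python
import math
from typing import List, Tuple

# Hypothetical input: (confidence score, is_true_positive, heading error in radians).
Detection = Tuple[float, bool, float]


def ap_and_aos(detections: List[Detection], num_gt: int, num_points: int = 11) -> Tuple[float, float]:
    """Interpolated average precision (AP) and average orientation similarity (AoS)."""
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)
    tp = 0
    sim_sum = 0.0
    recalls, precisions, similarities = [], [], []
    for n, (_, is_tp, d_theta) in enumerate(ranked, start=1):
        if is_tp:
            tp += 1
            # Similarity of one true positive: 1 for a perfect heading, 0 if opposite.
            sim_sum += (1.0 + math.cos(d_theta)) / 2.0
        recalls.append(tp / num_gt)
        precisions.append(tp / n)
        similarities.append(sim_sum / n)  # false positives count in the denominator

    ap = aos = 0.0
    for i in range(num_points):
        r = i / (num_points - 1)  # recall levels 0.0, 0.1, ..., 1.0 for 11 points
        # Interpolation: best value at any recall >= r (0 if that recall is never reached).
        ap += max((p for p, rc in zip(precisions, recalls) if rc >= r), default=0.0)
        aos += max((s for s, rc in zip(similarities, recalls) if rc >= r), default=0.0)
    return ap / num_points, aos / num_points


# Usage: two pedestrians, both found, but the second heading is estimated backwards.
dets = [(0.9, True, 0.0), (0.8, True, math.pi)]
ap, aos = ap_and_aos(dets, num_gt=2)
print(f"AP = {ap:.3f}, AoS = {aos:.3f}")  # AP = 1.000, AoS = 0.773
```

Because each true positive contributes a similarity of at most 1, AoS can never exceed AP; the gap between them reflects how much heading error costs.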

In our tests, we evaluated the effect of the pose features over a range of IoU thresholds, to see whether they mainly help for accurately localized detections (higher IoU thresholds) or for the less strict, lower-IoU cases. With the added features, both AP and AoS were higher (Figure 2), mostly at the higher IoU thresholds.
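
Evaluating over a range of IoU values means repeating the matching with different thresholds: a detection only counts as a true positive if its IoU with the ground truth reaches the threshold. The sketch below uses made-up IoU values purely for illustration, assumes each detection has already been paired one-to-one with a ground-truth box, and uses a hypothetical helper name.

```python
from typing import Dict, List, Tuple


def precision_recall_per_threshold(ious: List[float], num_gt: int,
                                   thresholds: List[float]) -> Dict[float, Tuple[float, float]]:
    """Precision and recall at each IoU threshold.

    ious[i] is the IoU of detection i with its matched ground-truth box (0.0 if unmatched).
    """
    results = {}
    for t in thresholds:
        tp = sum(1 for iou in ious if iou >= t)  # stricter thresholds accept fewer detections
        precision = tp / len(ious) if ious else 0.0
        recall = tp / num_gt if num_gt else 0.0
        results[t] = (precision, recall)
    return results


# Illustrative (not measured) IoUs of five detections for five pedestrians.
example_ious = [0.82, 0.71, 0.55, 0.43, 0.28]
print(precision_recall_per_threshold(example_ious, 5, [0.25, 0.5, 0.7]))
```

A feature that mainly improves precise localization shifts detections above the strict thresholds, so its benefit shows up most clearly at high IoU values.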

This indicates that our features help the detector localize people precisely and improve the predicted heading directions for detections with small position errors.

Since our approach has so far only been tested on a small dataset, it still needs to be improved and evaluated for real-time computation; this is part of our ongoing work in the project.

© ikg